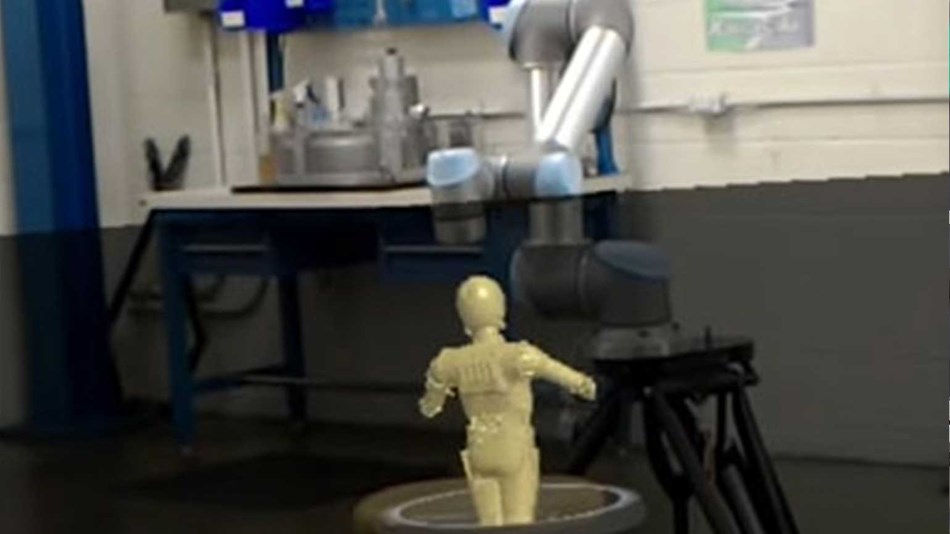

Augmented reality (AR) technology, usually delivered through headsets, smart glasses, or projections, superimposes data or graphics on the user’s view of the real world and uses sensors and cameras to capture the operator’s motions for feedback and control. Until now, AR’s primary application has been in gaming. But as the technology has become commoditized, it is finding a surprising new role in robotics research, and it may soon have a major impact on manufacturing and logistics automation, and eventually on home and service robots.

Robot programming was traditionally done by writing code, which was time-consuming and expensive. As a result, robots were typically programmed for a single specific task that they repeated over and over. Collaborative robots (cobots) made programming much easier by letting even untrained operators simply move the robot arm by hand and use a teach pendant to set waypoints and actions. This made programming more intuitive and flexible, so the robot could be quickly reprogrammed for new tasks. But while the robot’s movements are precise and consistent, they are generally not as smooth or as fast as human movements, and the robot still knows how to perform only the exact tasks it has been programmed for.

AR changes the game

AR allows a human operator to get inside the robot’s head, so to speak. With AR, the operator controls the robot through natural, smooth movements, giving it precise instructions simply by performing the tasks he or she wants the robot to emulate. This approach is ideal for cobots, which let human operators work directly with the robot arm without the interference of safety cages or fencing.

Embodied Intelligence uses AR to help robots learn complex tasks

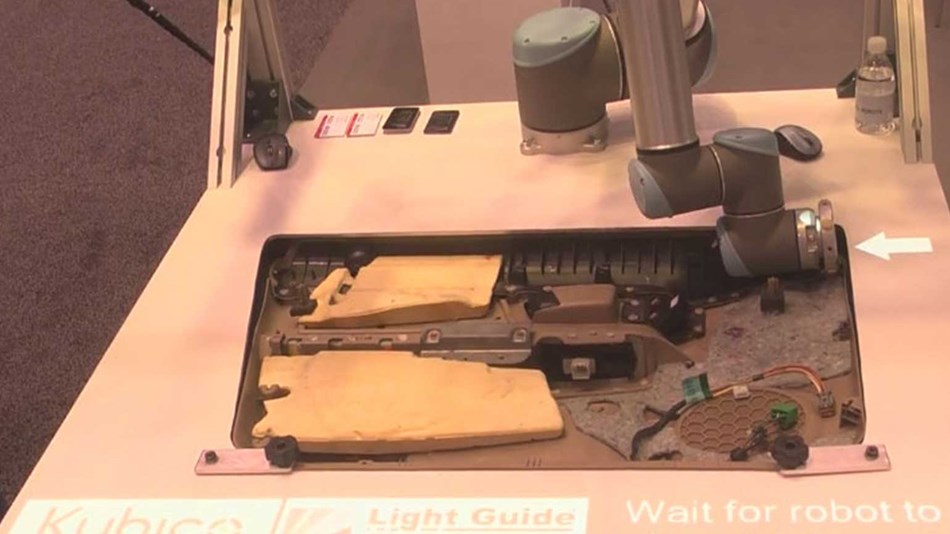

A new startup called Embodied Intelligence is banking on AR as a way to support machine learning that will greatly expand robots’ abilities. In a New York Times article, Embodied co-founder Pieter Abbeel explains that robot hardware is already nimble enough to mimic human behavior; the challenge is creating the software to guide that hardware. In its research, the Embodied team has been using a UR3 cobot with tele-operation (controlling the robot remotely through AR) to help robots learn new skills without the need for direct programming. Tele-operation gives the robot far more data, far more quickly, than other approaches. The robot can use this data to imitate, develop, and reinforce skills such as picking complex shapes out of bins, performing tasks that vary from one attempt to the next, such as plugging in wires or cables, and manipulating deformable and unpredictable objects.

Abbeel told IEEE Spectrum that “we’ve reached a point where we really believe that the time is right to start putting this into practice, not necessarily for a home robot, which needs to deal with an enormous amount of variation, but in manufacturing and logistics.”

Embodied Intelligence has already raised $7 million in venture funding, and the race is definitely on. As MIT Technology Review reported, researchers at Google DeepMind and Kindred AI are also making progress in this area. Outside the lab, manufacturers and integrators are exploring AR as well.